What is enterprise AI?

Enterprise artificial intelligence (AI) is the adoption of advanced AI technologies within large organizations. Taking AI systems from prototype to production introduces several challenges around scale, performance, data governance, ethics, and regulatory compliance. Enterprise AI includes policies, strategies, infrastructure, and technologies for widespread AI use within a large organization. Even though it requires significant investment and effort, enterprise AI is important for large organizations as AI systems become more mainstream.

What is an enterprise AI platform?

An enterprise AI platform is an integrated group of technologies that allows organizations to experiment with, develop, deploy, and operate AI applications at scale. Deep learning models are at the core of most modern AI applications. Enterprise AI requires higher AI model reuse between tasks rather than training a model from scratch each time there is a new problem or dataset. An enterprise AI platform provides the necessary infrastructure to reuse, productionize, and run deep learning models at scale across the organization. It is a complete, end-to-end, stable, resilient, and repeatable system that provides sustainable value while remaining flexible for continuous improvement and changing environments.

What are the benefits of enterprise AI?

When you implement enterprise AI, you can solve previously unsolvable challenges. It helps you drive new revenue sources and efficiencies in a large organization.

Drive innovation

Large enterprises typically have several hundred business teams, but not all have the budget and resources for data science skills. Enterprise-scale AI allows leadership to democratize artificial intelligence and machine learning (AI/ML) technologies and make them more accessible across the company. Anyone in the organization can suggest, experiment with, and incorporate AI tools into their business processes. Domain experts with business knowledge can contribute to AI projects and lead digital transformation.

Enhance governance

Siloed approaches to AI development provide limited visibility and governance. This reduces stakeholder trust and limits AI adoption, especially for predictions that feed critical decisions.

Enterprise AI brings transparency and control to the process. Organizations can control sensitive data access according to regulatory requirements while encouraging innovation. Data science teams can use explainable AI approaches to bring transparency to AI decision-making and increase end-user trust.

Reduce costs

Cost management for AI projects requires careful control over development effort, time, and computing resources, especially during training. An enterprise AI strategy can automate and standardize repetitive engineering efforts within the organization. AI projects get centralized, scalable access to computing resources, which helps avoid overlap and waste. You can optimize resource allocation, reduce errors, and improve process efficiencies over time.

Increase productivity

By automating routine tasks, AI can reduce wasted time and free people for more creative and productive work. Adding intelligence to enterprise software can also increase the speed of business operations, reducing the time needed between different stages in any enterprise activity. A shortened timeline from design to commercialization or production to delivery can provide an immediate return on investment.

What are the use cases of enterprise AI?

Enterprise AI applications can optimize everything from supply chain management to fraud detection and customer relationship management. Next, we give some examples with case studies.

Research and development

Organizations can analyze vast datasets, predict trends, and simulate outcomes to significantly reduce the time and resources required for product development. AI models can identify patterns and insights from past product successes and failures, guiding the development of future offerings. They can also support collaborative innovation so teams across different geographies work more effectively on complex projects.

For example, AstraZeneca, a global pharmaceutical brand, created an AI-driven drug discovery platform to increase quality and reduce the time it takes to discover a potential drug candidate.

Asset management

AI technologies optimize the acquisition, use, and disposal of physical and digital assets within an organization. For example, predictive maintenance algorithms can predict when equipment or machinery will likely fail or require maintenance. They can suggest operational adjustments for machinery to improve efficiency, reduce energy consumption, or extend the asset's life. Through AI-powered tracking systems, organizations gain real-time visibility into the location and status of their assets.

For example, Baxter International Inc., a global medical technology leader, uses AI to reduce unplanned equipment downtime, preventing over 500 machine hours of unplanned downtime in just one facility.

Customer service

AI can provide personalized, efficient, and scalable customer interactions. AI-powered chatbots and virtual assistants handle many customer inquiries without human intervention. AI can also analyze vast customer data in real-time, enabling businesses to offer personalized recommendations and support.

For example, T-Mobile, a global telecom company, uses AI to increase the speed and quality of customer interactions. Human agents serve customers better and faster, enriching the customer experience and creating stronger human-to-human connections.

What are the key technology considerations in enterprise AI?

Successfully deploying enterprise AI requires organizations to implement the following.

Data management

AI projects require easy and secure access to enterprise data assets. Organizations must build out their data engineering pipelines for both streaming and batch processing, along with the supporting architecture, such as a data mesh or data warehouse. They must ensure systems like data catalogs are in place so data scientists can quickly find and use the datasets they need. Centralized data governance mechanisms regulate data access and support risk management without creating unnecessary obstacles in data retrieval.
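To make the idea of a governed data catalog concrete, here is a minimal Python sketch. It is only an illustration: the dataset names, sensitivity levels, roles, and the `search_catalog` helper are all hypothetical, and a real deployment would rely on a managed catalog and access-control service rather than in-memory lists.

```python
from dataclasses import dataclass, field


@dataclass
class DatasetEntry:
    """One catalog record. All names and fields here are hypothetical."""
    name: str
    description: str
    sensitivity: str                      # "public", "internal", or "restricted"
    tags: set = field(default_factory=set)


# Hypothetical central policy: which sensitivity levels each role may read.
ROLE_POLICY = {
    "analyst": {"public", "internal"},
    "data_scientist": {"public", "internal"},
    "compliance_officer": {"public", "internal", "restricted"},
}

CATALOG = [
    DatasetEntry("sales_orders", "Daily order lines", "internal", {"sales", "batch"}),
    DatasetEntry("clickstream", "Website events", "internal", {"web", "streaming"}),
    DatasetEntry("patient_records", "Clinical data", "restricted", {"health"}),
]


def search_catalog(role: str, tag: str) -> list:
    """Return names of datasets with the given tag that the role may read."""
    allowed = ROLE_POLICY.get(role, set())   # unknown roles see nothing
    return [d.name for d in CATALOG if tag in d.tags and d.sensitivity in allowed]


print(search_catalog("data_scientist", "streaming"))   # ['clickstream']
print(search_catalog("data_scientist", "health"))      # []
```

Keeping the policy in one place means discovery and access control are answered by the same lookup, so teams can find data quickly without bypassing governance.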

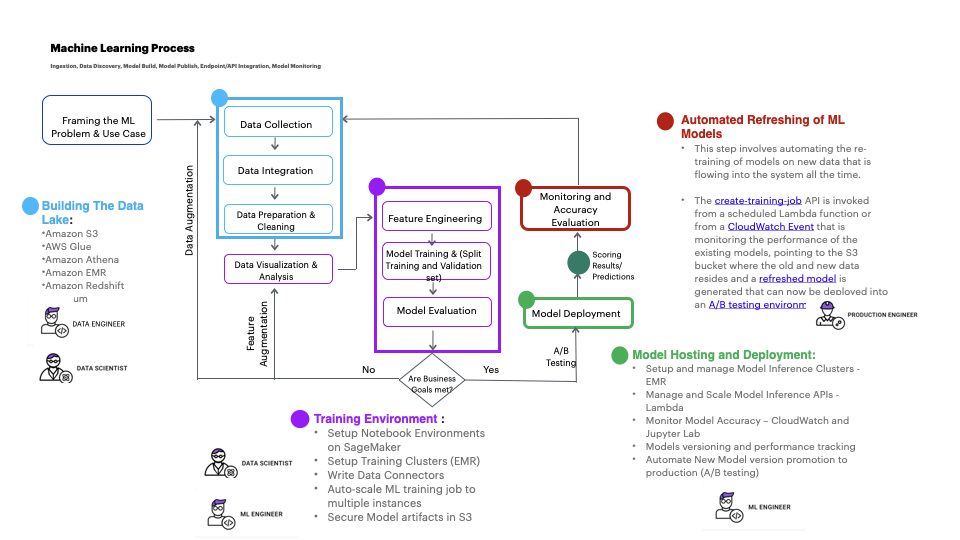

Model training infrastructure

Organizations must establish a centralized infrastructure to build and train new and existing machine learning models. For example, feature engineering involves extracting and transforming variables or features, such as price lists and product descriptions, from raw data for training. A centralized feature store allows different teams to collaborate, promoting reuse and avoiding silos with duplicate work efforts.
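As a rough illustration of what a centralized feature store does, the Python sketch below registers each feature definition once and serves the computed values to any team that asks. The `FeatureStore` class, the feature names, and the sample record are hypothetical and kept in memory for brevity; this is not the API of any particular product.

```python
from collections import defaultdict


class FeatureStore:
    """Toy in-memory feature store (hypothetical, for illustration only)."""

    def __init__(self):
        self._definitions = {}              # feature name -> transform function
        self._values = defaultdict(dict)    # entity id -> {feature name: value}

    def register(self, name, transform):
        """Register a feature once so every team reuses the same definition."""
        if name in self._definitions:
            raise ValueError(f"feature {name!r} is already registered")
        self._definitions[name] = transform

    def ingest(self, entity_id, raw_record):
        """Compute every registered feature for one raw record."""
        for name, transform in self._definitions.items():
            self._values[entity_id][name] = transform(raw_record)

    def get_features(self, entity_id, names):
        """Return the requested features in the order they were asked for."""
        return [self._values[entity_id][n] for n in names]


store = FeatureStore()
store.register("price_usd", lambda r: round(r["price_cents"] / 100, 2))
store.register("description_length", lambda r: len(r["description"].split()))

store.ingest("sku-1", {"price_cents": 1999, "description": "Stainless steel water bottle"})
print(store.get_features("sku-1", ["price_usd", "description_length"]))  # [19.99, 4]
```

Rejecting duplicate registrations is one simple way to surface overlapping work early: a second team that tries to define `price_usd` again is pointed at the existing definition instead.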

Similarly, systems that support retrieval-augmented generation (RAG) are needed so that data science teams can adapt existing AI models with internal enterprise data. Large language models (LLMs) are trained on vast data volumes and use billions of parameters to generate original output. You can use them for natural language processing tasks such as answering questions and translating languages. RAG extends the already powerful capabilities of LLMs to specific domains or an organization's internal knowledge base, all without the need to retrain the model.
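The retrieval step of RAG can be sketched in a few lines of Python. This is a simplified illustration under stated assumptions: the documents are invented, word-overlap cosine similarity stands in for the embedding search a production system would typically use, and no model is called. The sketch stops at building the prompt that would be sent to an LLM.

```python
import math
import re
from collections import Counter

# Hypothetical internal knowledge base.
DOCUMENTS = [
    "Expense reports must be submitted within 30 days of purchase.",
    "Employees accrue 1.5 vacation days per month of service.",
    "Laptops are refreshed every three years by the IT department.",
]


def tokenize(text):
    """Lowercase bag-of-words counts (a stand-in for a real embedding)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a, b):
    """Cosine similarity between two word-count vectors."""
    dot = sum(a[t] * b[t] for t in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(question, k=1):
    """Return the k documents most similar to the question."""
    q = tokenize(question)
    ranked = sorted(DOCUMENTS, key=lambda d: cosine(q, tokenize(d)), reverse=True)
    return ranked[:k]


def build_prompt(question, k=1):
    """Augment the question with retrieved context; the model itself is unchanged."""
    context = "\n".join(f"- {d}" for d in retrieve(question, k))
    return f"Answer using only this context:\n{context}\n\nQuestion: {question}"


print(build_prompt("How many vacation days do employees accrue?"))
```

Because the internal knowledge arrives through the prompt, updating the knowledge base changes the answers without any retraining.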

Central model registry

A central model registry is an enterprise catalog for LLMs and machine learning models built and trained across different business units. It allows model versioning, which lets teams accomplish many tasks:

- Track model iterations over time

- Compare performance across different versions

- Ensure that deployments are using the most effective and up-to-date versions

Teams can also maintain detailed records of model metadata, including training data, parameters, performance metrics, and usage rights. This enhances collaboration among teams and streamlines the governance, compliance, and auditability of AI models.
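The versioning tasks listed above can be sketched with a small in-memory registry in Python. The `ModelRegistry` class, the model name, the metric values, and the metadata fields are all hypothetical; managed registries offer far richer features, but the core bookkeeping looks roughly like this.

```python
from dataclasses import dataclass, field


@dataclass
class ModelVersion:
    version: int
    metrics: dict
    metadata: dict = field(default_factory=dict)


class ModelRegistry:
    """Toy central registry (hypothetical, for illustration only)."""

    def __init__(self):
        self._models = {}   # model name -> list of ModelVersion, oldest first

    def register(self, name, metrics, **metadata):
        """Store a new version and assign the next version number."""
        versions = self._models.setdefault(name, [])
        entry = ModelVersion(len(versions) + 1, metrics, metadata)
        versions.append(entry)
        return entry

    def history(self, name):
        """Track iterations over time."""
        return list(self._models[name])

    def best(self, name, metric):
        """Compare versions and return the one with the highest metric."""
        return max(self._models[name], key=lambda v: v.metrics[metric])


registry = ModelRegistry()
registry.register("churn", {"auc": 0.81}, training_data="crm_2023", owner="marketing")
registry.register("churn", {"auc": 0.86}, training_data="crm_2024", owner="marketing")
registry.register("churn", {"auc": 0.84}, training_data="crm_2024_q2", owner="marketing")

best = registry.best("churn", "auc")
print(best.version, best.metadata["training_data"])   # 2 crm_2024
```

In this made-up history the newest version is not the best one, which is why a deployment should consult recorded metrics rather than assume the latest version wins.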

Model deployment

Practices like MLOps and LLMOps introduce operational efficiency to enterprise AI development. They apply the principles of DevOps to the unique challenges of AI and machine learning.

For example, you can automate various ML and LLM lifecycle stages, such as data preparation, model training, testing, and deployment, to reduce manual errors. Building ML and LLM operational pipelines facilitates continuous integration and delivery (CI/CD) of AI models. Teams can rapidly iterate and update models based on real-time feedback and changing requirements.
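A minimal Python sketch of such an automated pipeline follows. Everything in it is an assumption made for illustration: the toy dataset, the threshold "model", the stage names, and the `min_accuracy` gate. A real pipeline would run on an orchestration service, but the shape (ordered stages plus a quality gate before deployment) is the same.

```python
def prepare_data(ctx):
    # Hypothetical toy data: (feature, label) pairs where label = feature > 5.
    rows = [(x, int(x > 5)) for x in range(10)]
    ctx["train"], ctx["test"] = rows[::2], rows[1::2]


def train_model(ctx):
    # "Training" picks the threshold with the fewest errors on the training split.
    def errors(t):
        return sum(int(x > t) != y for x, y in ctx["train"])
    ctx["threshold"] = min(range(10), key=errors)


def evaluate_model(ctx):
    # Score the trained threshold on the held-out split.
    correct = sum(int(x > ctx["threshold"]) == y for x, y in ctx["test"])
    ctx["accuracy"] = correct / len(ctx["test"])


def deploy_model(ctx):
    # Quality gate: refuse to deploy a model that misses the accuracy bar.
    if ctx["accuracy"] < ctx["min_accuracy"]:
        raise RuntimeError(f"accuracy {ctx['accuracy']:.2f} is below the gate")
    ctx["deployed"] = True


def run_pipeline(min_accuracy=0.75):
    """Run every stage in order; any exception stops the release."""
    ctx = {"min_accuracy": min_accuracy, "deployed": False}
    for stage in (prepare_data, train_model, evaluate_model, deploy_model):
        stage(ctx)
    return ctx


result = run_pipeline()
print(result["accuracy"], result["deployed"])   # 0.8 True
```

Raising the gate, for example `run_pipeline(min_accuracy=0.9)`, makes the deployment stage raise `RuntimeError`, so a weaker model never reaches production without a manual decision.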

Model monitoring

Monitoring is crucial to managing AI models because it helps ensure the reliability, accuracy, and relevance of AI-generated content over time. Generative AI models are prone to hallucination, occasionally producing inaccurate information. Model output can also become less relevant as data and contexts evolve.

Organizations must implement human-in-the-loop mechanisms to effectively manage LLM output. Domain experts periodically assess AI output to ensure its accuracy and appropriateness. Using real-time feedback from end users, organizations can maintain the integrity of the AI model and ensure it meets the evolving needs of stakeholders.
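One possible shape for a human-in-the-loop monitor is sketched below in Python. The `ReviewMonitor` class and its thresholds are hypothetical, and the sketch assumes each output arrives with a confidence score, which many systems would need to estimate separately. Low-confidence outputs always go to reviewers, a random sample of the rest is spot-checked, and a rolling approval rate signals when the model needs attention.

```python
import random
from collections import deque


class ReviewMonitor:
    """Toy human-in-the-loop monitor (hypothetical, for illustration only)."""

    def __init__(self, sample_rate=0.2, min_confidence=0.5,
                 window=50, alert_below=0.9, seed=0):
        self._rng = random.Random(seed)
        self.sample_rate = sample_rate          # share of confident outputs to spot-check
        self.min_confidence = min_confidence    # below this, always ask a human
        self.alert_below = alert_below          # approval rate that triggers an alert
        self.verdicts = deque(maxlen=window)    # most recent reviewer verdicts
        self.review_queue = []

    def observe(self, output, confidence):
        """Queue low-confidence outputs always, plus a random sample of the rest."""
        if confidence < self.min_confidence or self._rng.random() < self.sample_rate:
            self.review_queue.append(output)

    def record_verdict(self, is_acceptable):
        """Store a domain expert's judgment on one reviewed output."""
        self.verdicts.append(bool(is_acceptable))

    def approval_rate(self):
        """Share of recent reviews judged acceptable, or None before any review."""
        return sum(self.verdicts) / len(self.verdicts) if self.verdicts else None

    def needs_attention(self):
        rate = self.approval_rate()
        return rate is not None and rate < self.alert_below


monitor = ReviewMonitor(sample_rate=0.0)        # no random sampling in this demo
monitor.observe("Refunds take 3 to 5 days.", confidence=0.95)
monitor.observe("Refunds are instant.", confidence=0.30)
print(monitor.review_queue)                     # ['Refunds are instant.']

for verdict in [True] * 8 + [False] * 2:
    monitor.record_verdict(verdict)
print(monitor.approval_rate(), monitor.needs_attention())   # 0.8 True
```

The fixed-size window means old verdicts age out, so the approval rate reflects how the model behaves on current data rather than its lifetime average.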

How can AWS support your enterprise AI strategy?

Amazon Web Services (AWS) offers the easiest way to build and scale AI applications, grounded in model choice and flexibility. We've helped enterprises adopt AI systems across every line of business, with end-to-end security, privacy, and AI governance.

Choose from the broadest and deepest set of services that match your business needs. You can find end-to-end solutions and pre-trained AI services or build your own enterprise AI platforms and models on fully managed infrastructures.

AWS pre-trained AI services

AWS pre-trained AI services provide ready-made intelligence for your applications and workflows. For example, you can use Amazon Rekognition for image and video analysis, Amazon Lex for conversational interfaces, or Amazon Kendra for enterprise search. You can get quality and accuracy from continuously learning APIs without training or deploying models.

Amazon Bedrock

Amazon Bedrock is a fully managed service that offers a choice of high-performing foundation models (FMs) from leading AI companies through a single API. It also provides a broad set of capabilities to build generative AI applications with security, privacy, and responsible AI.

Using Amazon Bedrock, you can easily experiment with and evaluate top FMs for your use case. Then, you can privately customize them with your data using techniques such as fine-tuning and retrieval-augmented generation (RAG). And you can build agents that execute tasks using your enterprise systems and data sources.

Amazon SageMaker

Amazon SageMaker is a fully managed service that combines a broad set of tools to enable high-performance, low-cost deep learning for any use case. With SageMaker, you can build, train, and deploy deep learning models at scale. You use tools like notebooks, debuggers, profilers, and pipelines all in one integrated development environment (IDE).

AWS Deep Learning AMIs

AWS Deep Learning AMIs (DLAMI) provide enterprise AI researchers with a curated and secure set of frameworks, dependencies, and tools. These accelerate deep learning on Amazon Elastic Compute Cloud (Amazon EC2).

An Amazon Machine Image (AMI) is an image that provides the information required to launch an instance. Built for Amazon Linux and Ubuntu, DLAMIs are preconfigured with the following:

- TensorFlow

- PyTorch

- NVIDIA CUDA drivers and libraries

- Intel MKL

- Elastic Fabric Adapter (EFA)

- aws-ofi-nccl plugin

These help you to quickly deploy and run AI frameworks and tools at scale.

Get started with enterprise AI on AWS by creating an account today.